Every B2B marketing leader has heard the warnings by now. Google traffic is declining. ChatGPT is replacing search for research. Your buyers are asking LLMs for vendor recommendations, and you have no idea if your brand even shows up.

The SEO tool vendors see this shift too. SEMrush, Ahrefs, and newcomers like Mentions are rolling out "AI visibility" features that promise to track how often your brand appears in LLM responses. The pitch is compelling: if your buyers are using AI to research solutions, shouldn't you know whether ChatGPT recommends your competitors instead of you?

We decided to test these tools across our own properties and several client sites. Not to write an official vendor comparison (yet), but to answer a practical question: is there any real benefit to investing in AI visibility tracking today?

The short answer: not yet.

The tools show promise, but the data infrastructure isn't there. More importantly, anyone claiming they've cracked the code on how to manipulate LLM visibility is lying. These systems are still largely a black box, and the theories about how to game them are just that: theories.

Here's what we found, and what you should focus on instead.

The Current State of AI Visibility Tools

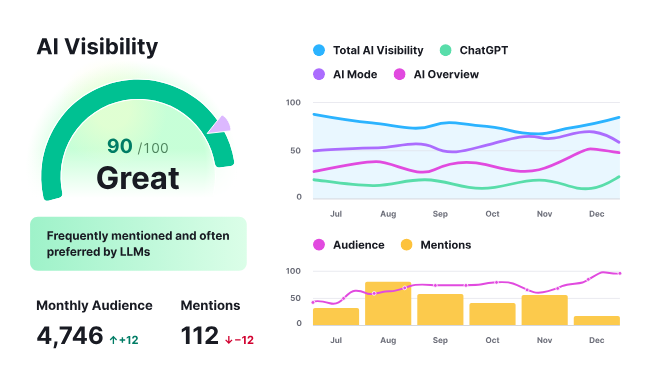

The concept makes sense in theory. Just like you track keyword rankings in Google, you should track "prompt rankings" in ChatGPT, Claude, Gemini, and Perplexity. You want to know how often your brand appears, what prompts trigger mentions, and how you stack up against competitors.

The tools we tested claim to deliver these insights. In practice, they provide dashboard scores without the substance you need to act on them.

Zero Visibility Doesn't Mean Zero Impact

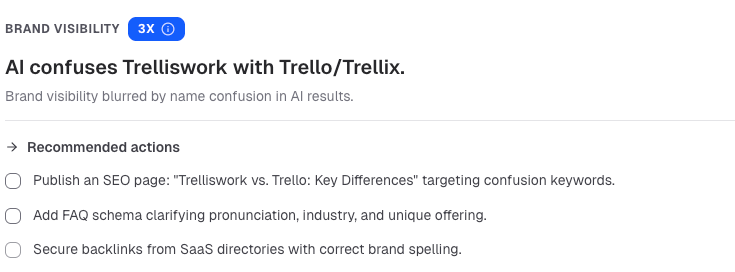

If you're not a household brand with massive search volume, expect to see mostly zeros in your dashboard. The tools assign you an "AI visibility score," but provide almost no context for what drives that number.

One client site we tested scored a 0. Their direct competitor scored a 35/100. Sounds concerning, right? Except there's no breakdown of which prompts drove that 35, how many actual appearances that represents, or what content made them visible. You can't reverse engineer success when the underlying data isn't exposed.

This isn't like traditional SEO, where you can identify long-tail keywords, see monthly search volume, and build an optimization plan. The LLM vendors aren't sharing prompt data at scale. OpenAI doesn't provide a "Search Console for ChatGPT." Neither does Anthropic for Claude, or Google for Gemini.

The AI visibility tools are trying to fill a gap that's mostly still empty.

The Data Problem Is Foundational

These tools are building on quicksand.

Google Search Console exists because Google wants site owners to improve their content. Better content creates better search results. The incentive structure works for everyone.

LLM providers haven't opened up that same transparency yet. You can't see which prompts mentioned your brand, how often you appeared in responses, what context surrounded those mentions, or whether users took action afterward.

Some tools are scraping what they can or running test prompts at scale to simulate visibility. But it's a thin dataset compared to the depth available for traditional search. Until the LLM vendors provide real transparency, these tracking dashboards are measuring shadows.

One Thing Works: The ICP Exercise

Mentions does something valuable that has nothing to do with tracking. After a few general questions, its onboarding takes a crack at drafting your ideal customer profile (ICP). It identifies competitors, articulates differentiators, and maps the questions buyers naturally ask based on your services. It does a decent job of it, so you can quickly edit or replace what it creates.

This exercise matters. It pushes you to think about brand positioning in the context of conversational queries, not just keyword strings. If someone asks an LLM, "What tools help B2B companies improve pipeline visibility without adding headcount?" how should your brand be described in that response?

That's a strategic question worth answering, regardless of whether you can track the results.

But after building that foundation, the tool primarily tracks prompts that explicitly mention your company name. Of course you appear in those results. The more valuable question is whether you surface in category-level or problem-level queries where your name isn't mentioned at all.

Those are the prompts that drive net-new awareness. And the tools can't reliably track them yet.

Traditional SEO Vendors Are Adding Bolt-On Features

SEMrush and Ahrefs are layering AI visibility modules into their existing platforms. On the surface, this seems efficient. You already pay for these tools, so why not get AI metrics in the same dashboard?

The risk is they're applying an SEO framework to a fundamentally different problem. AI visibility isn't just about keywords and backlinks. It's about how your brand narrative gets synthesized into conversational responses. It's about authenticity, context, and the authority signals that LLMs use to decide what's worth citing.

If you optimize for trigger words without understanding how LLMs construct answers, you might improve a score that doesn't correlate with actual buyer influence.

What You Should Do Instead

If the tracking tools aren't ready, what's the alternative? Focus on the fundamentals that drive AI visibility, whether you can measure it precisely or not.

Build Content That LLMs Want to Reference

LLMs are trained on public content, and many also retrieve from indexed sources when they generate a response. If your content is thin, generic, or keyword-stuffed, it is unlikely to surface in AI responses no matter what your dashboard says.

Write content that demonstrates real expertise. Address specific buyer problems with depth. Provide clear points of view backed by experience. This is what gets cited when an LLM synthesizes an answer.

Forget the tricks. There are no tricks yet. Anyone who tells you they've reverse-engineered the ranking algorithm for ChatGPT is selling you something they don't have. These systems are black boxes, and the theories floating around are mostly speculation dressed up as strategy.

What works is the same thing that's always worked: create content valuable enough that it becomes a source of truth in your space.

Make Your Content Crawlable and Structured

LLMs need to access your content to reference it. That means basic technical hygiene matters more than ever.

Ensure your site is crawlable. Use clear URL structures. Format your pages with proper headings, lists, and semantic HTML. Make it easy for both traditional search engines and AI systems to parse what you're saying.

If you have key service pages, product explainers, or methodology documentation, structure them clearly. Use headings to break up sections. Include definitions for important terms. Link to authoritative sources that support your claims.

This isn't new advice. It's just more important now.
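One piece of that hygiene is easy to check yourself: whether your robots.txt blocks AI crawlers. Here is a minimal sketch using Python's standard-library robots.txt parser. The crawler tokens (GPTBot, ClaudeBot, PerplexityBot, Google-Extended) and the sample file are our assumptions for illustration, so confirm the current tokens in each vendor's documentation before relying on the result.

```python
from urllib.robotparser import RobotFileParser

# Assumed crawler tokens; verify against each vendor's current documentation.
AI_CRAWLERS = ["GPTBot", "ClaudeBot", "PerplexityBot", "Google-Extended"]


def blocked_crawlers(robots_txt, url, agents=AI_CRAWLERS):
    """Return the agents that this robots.txt text disallows from fetching url."""
    parser = RobotFileParser()
    # parse() takes the file as a list of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]


# Hypothetical robots.txt content, for illustration only.
SAMPLE_ROBOTS = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
"""

if __name__ == "__main__":
    print(blocked_crawlers(SAMPLE_ROBOTS, "https://example.com/services/"))
```

To run it against your own site, paste the contents of your robots.txt in place of the sample and pass the URL of a page you want AI systems to read. A passing check only tells you the crawler is allowed in. It says nothing about whether your content gets used.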

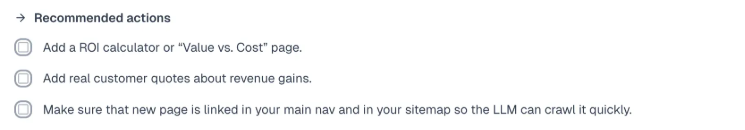

More kudos to Mentions in this area: it provided the most depth in suggesting structured content that might improve your "score." Most suggestions were obvious: write about the topics that involve your services or the customer problems identified in its ICP analysis. However, Mentions also attempted to diagnose some general visibility problems with your brand and suggested content pieces unrelated to services (e.g., a blog post focused specifically on who Trelliswork is, so that LLMs can fill in the gaps in what they glean from pages, FAQs, and services pages).

Link to Authoritative Sources (And Earn Backlinks)

LLMs weight authority when deciding what to include in responses. That authority comes partly from who links to you and who you link to.

Build relationships with credible sources in your industry. Contribute to publications that matter. Get cited in research reports, analyst briefs, and case studies from recognized firms.

When you publish your own content, link out to authoritative sources that support your points. This isn't just good practice for readers. It signals to AI systems that your content exists in a network of credible information.

Define How You Want to Be Described

Think about how you want an LLM to describe your company when someone asks about your category. What's your core differentiation? What problems do you solve that competitors don't? How would you explain your value in two clear sentences?

Document this. Make it public. Repeat it consistently across your site, case studies, thought leadership, and any content you control.

LLMs synthesize from available sources. If your positioning is clear and consistent everywhere, that's what gets reflected in AI responses. If it's muddled or contradictory, the LLM will struggle to represent you accurately.

Test Your Own Visibility Manually

You don't need a paid tool to understand your AI presence. Open ChatGPT, Claude, Perplexity, or Gemini. Ask the questions your buyers would ask. See what shows up.

Try variations:

- "What are the best tools for [your category]?"

- "How do B2B companies solve [problem you address]?"

- "What should I look for when evaluating [your solution type]?"

Does your brand appear? If yes, how is it described? If no, look at what does appear. What made that content authoritative enough to reference? What sources get cited?

Reverse engineer those patterns. Look at the structure, depth, linking behavior, and positioning of the content that wins. Then build your own content strategy around those observations.

This is manual and time-consuming. But it's more actionable than a dashboard score you can't interpret.
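A small script can keep the manual test organized. The sketch below fills in the three prompt variations and tallies how often a brand name appears in responses you paste in by hand. It does not call any LLM API, and the function names and the simple whole-word matching rule are our own assumptions, not a feature of any tool discussed here.

```python
import re

# Templates mirror the three prompt variations listed above.
TEMPLATES = [
    "What are the best tools for {category}?",
    "How do B2B companies solve {problem}?",
    "What should I look for when evaluating {solution_type}?",
]


def build_prompts(category, problem, solution_type):
    """Fill each template so the same prompts can be reused across assistants."""
    return [
        t.format(category=category, problem=problem, solution_type=solution_type)
        for t in TEMPLATES
    ]


def mentions_brand(response_text, brand):
    """True if the brand name appears as a whole word, ignoring case."""
    pattern = r"\b" + re.escape(brand) + r"\b"
    return re.search(pattern, response_text, flags=re.IGNORECASE) is not None


def mention_rate(responses, brand):
    """Share of pasted responses that mention the brand (0.0 if none were given)."""
    if not responses:
        return 0.0
    hits = sum(mentions_brand(text, brand) for text in responses)
    return hits / len(responses)
```

Run the same prompts in each assistant, paste the answers into a list, and call `mention_rate(responses, "YourBrand")`. LLM answers vary from run to run, so repeat each prompt a few times and treat the number as directional at best.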

Promote Your Content in Traditional Ways

AI visibility doesn't replace traditional distribution. It complements it.

Keep promoting your content through email, social, partner channels, and any other distribution you've built. The more your content gets read, shared, and linked to, the stronger the authority signals become. Those signals matter for both traditional search and AI discoverability.

Don't abandon what works in pursuit of a new metric you can't control yet.

The Vanity Metrics Problem

You could add these AI visibility tools to your stack today, get a score, and have no idea what it means or what to do about it.

If your score is high, great. But why? If it's low, what's the actual fix? The tools don't provide enough depth to connect visibility to action.

This is dangerous for marketing leaders who need to justify spend and show progress. A static or declining AI visibility score without context creates pressure to "do something" without clarity on what that something should be.

You risk adding another dashboard that looks important but doesn't drive real decisions. That's the definition of a vanity metric.

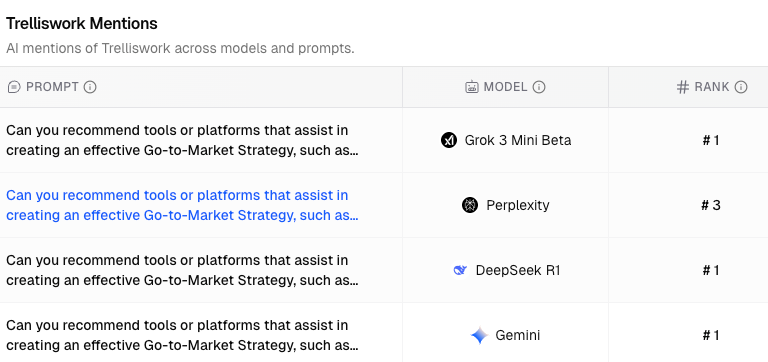

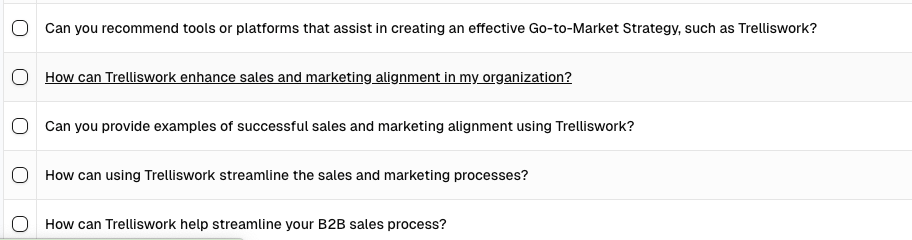

Our score has ranged from 30% to 80%, and when you dig deeper, you start to see why. The tool is giving us credit for questions and responses that aren't realistic. No one would ask these questions about Trelliswork.

What to Watch For as These Tools Mature

These tools will no doubt get a lot better as LLM providers open up more transparency. When that happens, AI visibility tracking will become essential infrastructure, just like SEO tools are today.

What needs to happen for these tools to cross the threshold from "interesting" to "must-have":

Real prompt-level data. You need to see which specific prompts triggered your brand, how often, and in what context. Not aggregated scores, and not prompts that named your brand from the start, but granular visibility into which queries actually find you in the haystack.

Actionable recommendations. The tools need to analyze why certain content surfaces and provide specific guidance on what to change. "Improve your AI visibility score" isn't helpful. "Add more structured data to your service pages and link to these three authority sources" is.

Competitive context that matters. Knowing your competitor scored higher is useless without understanding what they did to earn that score. The tools need to surface the content, structure, and positioning differences that drive visibility gaps.

Validation that scores correlate with outcomes. Until there's proof that a higher AI visibility score leads to more inbound interest, pipeline, or revenue, these metrics remain theoretical. The tools need to connect their scores to business impact. We expect this to change quickly, because LLM providers will want ways to monetize beyond a paid chat interface.
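Until the tools provide that validation, you can run a rough version of the check yourself. The sketch below computes a Pearson correlation between two series you supply, for example weekly visibility scores and weekly inbound leads. The pairing of those two series is our assumption about what you might compare. No tool we tested exposes this calculation.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient for two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Covariance and variances are left unnormalized; the 1/n factors cancel.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one series is constant")
    return cov / sqrt(var_x * var_y)
```

A value near 1 means the two series rise and fall together, a value near 0 means no linear relationship, and a value near -1 means they move in opposite directions. With only a handful of weeks of data the result is noisy, and even a strong correlation does not show that the score caused the leads.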

Set a calendar reminder to revisit this space in 3-6 months.

Where We Land

AI visibility tools are trying to solve a real problem. Buyer behavior is shifting toward AI-supported research, and you need to understand your presence in that environment.

But the infrastructure to track and optimize that presence is still immature. The tools from Mentions, SEMrush, and Ahrefs show the right strategic thinking. They understand what needs to be measured. They're building the frameworks and interfaces. The underlying data layer just isn't robust enough yet to deliver actionable value for most B2B brands. That said, as noted above, we think Mentions.so is leaping ahead because it appears to have been designed for this task from the start. We're excited to watch the platform keep expanding.

If you're a high-volume, high-recognition brand, you might extract some directional insights. Everyone else is better off investing in the fundamentals: deep content, clear positioning, strong technical structure, and authentic authority building.

We'll keep testing these tools as they evolve. When the data catches up to the dashboards, we'll be the first to tell you. Just not today.
